Using a video-driven tracking procedure applied to a real speaker, a correspondence is learned between any stream of phonemes and a set of phonetically oriented facial motion parameters. This work was initiated at the ICP for the MOTHER project (sponsored by France Telecom Multimedia) and is currently being extended to English speakers and to a complete model of the face.
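As a rough illustration of what "learning a correspondence between a phoneme stream and facial motion parameters" can mean, here is a deliberately simple baseline sketch. It is not the method used in MOTHER. It assumes the tracking step has already produced one parameter vector per video frame, and that each frame carries a phoneme label. It learns one average "target" vector per phoneme. It then turns a new sequence of (phoneme, duration in frames) pairs into a frame-by-frame trajectory by linearly interpolating between consecutive targets. The function names `learn_phoneme_targets` and `synthesize`, and the parameter dimensions, are hypothetical placeholders. A realistic talking head would need a much richer model of coarticulation.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical representation: one facial parameter vector per tracked video frame.
Vector = Tuple[float, ...]


def learn_phoneme_targets(labels: Sequence[str],
                          params: Sequence[Sequence[float]]) -> Dict[str, Vector]:
    """Average the tracked facial parameters of all frames labelled with each phoneme."""
    if len(labels) != len(params):
        raise ValueError("need one phoneme label per tracked frame")
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for label, frame in zip(labels, params):
        if label not in sums:
            sums[label] = [0.0] * len(frame)
        for i, value in enumerate(frame):
            sums[label][i] += value
        counts[label] += 1
    # Mean vector per phoneme acts as that phoneme's articulatory "target".
    return {p: tuple(s / counts[p] for s in acc) for p, acc in sums.items()}


def synthesize(phonemes: Sequence[Tuple[str, int]],
               targets: Dict[str, Vector]) -> List[Vector]:
    """Turn (phoneme, duration in frames) pairs into a frame-by-frame parameter track.

    Within each segment the parameters move linearly from the previous phoneme's
    target to the current one, reaching the current target on the segment's last frame.
    """
    track: List[Vector] = []
    previous: Optional[Vector] = None
    for phoneme, frames in phonemes:
        target = targets[phoneme]
        start = previous if previous is not None else target
        for k in range(1, frames + 1):
            t = k / frames  # fraction of the way from start to target
            track.append(tuple(a + (b - a) * t for a, b in zip(start, target)))
        previous = target
    return track
```

For example, with two frames labelled `"a"` and one labelled `"m"`, the learned targets are per-phoneme means, and synthesizing `[("a", 1), ("m", 2)]` yields a track that moves smoothly from the `"a"` target to the `"m"` target. Linear interpolation is chosen here only because it is the simplest transition rule that avoids jumps between segments.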

Related articles

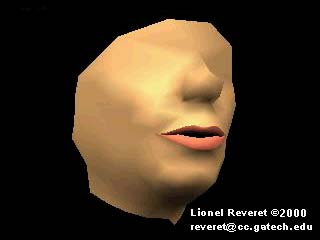

L. Reveret, G. Bailly, P. Badin

MOTHER: A new generation of talking heads providing a flexible articulatory control for video-realistic speech animation

Proc. of the 6th Int. Conference on Spoken Language Processing, ICSLP'2000, Beijing, China, Oct. 16-20, 2000.