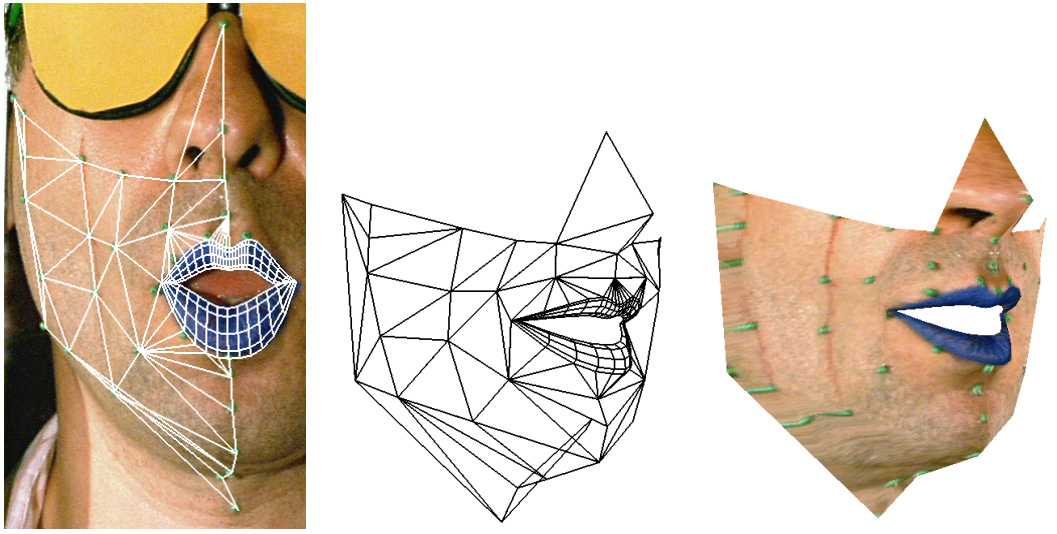

The 3D geometric lip model, based on the interpolation of 30 control points, and a smooth-shaded rendering.
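The page does not say which interpolation scheme the model uses. As a minimal sketch of the general idea, the example below passes a closed uniform Catmull-Rom spline through 30 control points. The spline choice, the function names `catmull_rom` and `interpolate_closed`, and the elliptical placeholder points are all assumptions made for illustration, not the authors' method or data.

```python
import math


def catmull_rom(p0, p1, p2, p3, t):
    """Uniform Catmull-Rom interpolation between p1 and p2 for t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (
            2 * b
            + (c - a) * t
            + (2 * a - 5 * b + 4 * c - d) * t2
            + (-a + 3 * b - 3 * c + d) * t3
        )
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def interpolate_closed(points, samples_per_segment=8):
    """Sample a closed curve that passes through every control point."""
    n = len(points)
    curve = []
    for i in range(n):
        # Neighbouring control points, wrapping around to close the contour.
        p0, p1, p2, p3 = (points[(i + k - 1) % n] for k in range(4))
        for s in range(samples_per_segment):
            curve.append(catmull_rom(p0, p1, p2, p3, s / samples_per_segment))
    return curve


# Hypothetical data: 30 points on an ellipse standing in for a lip contour.
control_points = [
    (2.0 * math.cos(2 * math.pi * i / 30), math.sin(2 * math.pi * i / 30), 0.0)
    for i in range(30)
]
contour = interpolate_closed(control_points)
```

An interpolating spline is used here rather than an approximating one (such as a B-spline) so that the curve passes exactly through each control point, which keeps the control points directly comparable to positions measured on a speaker's lips.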

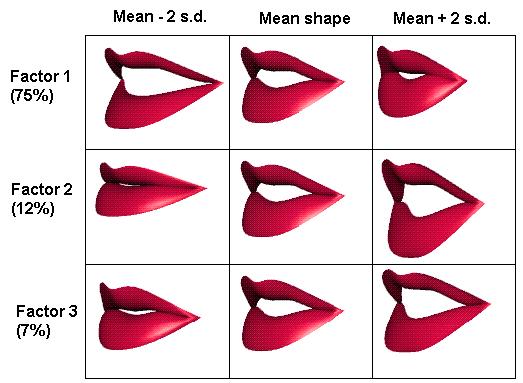

1. lip rounding, which separates rounded vowels and spread vowels,

2. lower lip motion, mainly correlated with jaw opening,

3. upper lip motion, to perform full closure for stop consonants.

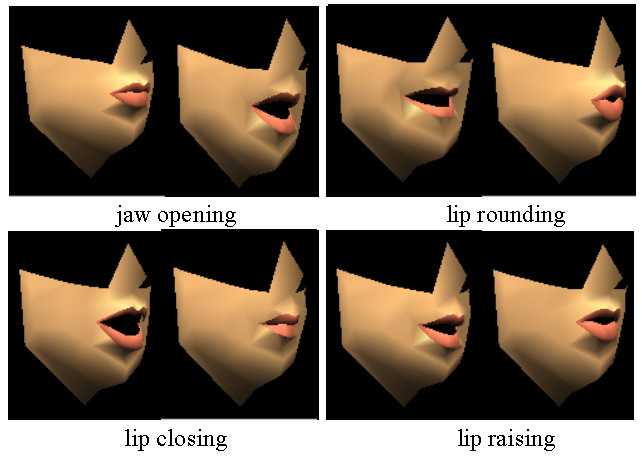

1. jaw opening,

2. jaw advance,

3. lips rounding,

4. lips closure,

5. lips raising (for fricatives such as /f/ and /v/),

6. glottal height.
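One common way to drive a mesh from a small set of articulatory parameters like the six listed above is a linear model, where each parameter scales its own displacement field added to a neutral shape. The page does not state that the model is linear, so the sketch below is only an assumption for illustration; the function `deform`, the parameter keys, and the two-vertex toy mesh are hypothetical.

```python
# Hypothetical parameter names mirroring the six parameters listed above.
PARAMETERS = [
    "jaw_opening",
    "jaw_advance",
    "lip_rounding",
    "lip_closure",
    "lip_raising",
    "glottal_height",
]


def deform(neutral, basis, weights):
    """Displace each neutral vertex by a weighted sum of per-parameter offsets.

    neutral: list of (x, y, z) vertices in the rest pose.
    basis:   dict mapping a parameter name to one (dx, dy, dz) per vertex.
    weights: dict mapping a parameter name to its activation value.
    """
    result = []
    for index, vertex in enumerate(neutral):
        displaced = list(vertex)
        for name, weight in weights.items():
            offset = basis[name][index]
            for axis in range(3):
                displaced[axis] += weight * offset[axis]
        result.append(tuple(displaced))
    return result


# Toy mesh (assumed): one upper-lip vertex and one lower-lip vertex.
neutral = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
zero = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
basis = {name: zero for name in PARAMETERS}
basis["jaw_opening"] = [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]  # lowers the lower lip
basis["lip_rounding"] = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]  # protrudes both lips

pose = deform(neutral, basis, {"jaw_opening": 0.5, "lip_rounding": 0.2})
```

With this toy basis, opening the jaw moves only the lower-lip vertex while rounding pushes both vertices forward, so the parameters can be set independently for each speech frame.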

The following figures show the 3D model superimposed on the speaker image, the 3D wireframe and a rendering by texture mapping.

This work has been supported by France Telecom Multimedia, with the collaboration of G. Bailly, P. Badin and P. Borel at the ICP.

Related articles

L. Reveret, C. Benoit

A New 3D Lip Model for Analysis and Synthesis of Lip Motion in Speech Production (PS.gz | PDF)

Proc. of the Second ESCA Workshop on Audio-Visual Speech Processing, AVSP'98, Terrigal, Australia, Dec. 4-6, 1998.

L. Reveret

Design and evaluation of a video tracking system of lip motion in speech production (PS.gz | PDF)

PhD dissertation, INPG, Grenoble, France, June 1999.

L. Reveret, G. Bailly, P. Badin

MOTHER: A new generation of talking heads providing a flexible articulatory control for video-realistic speech animation (PS.gz | PDF)

Proc. of the 6th Int. Conference on Spoken Language Processing, ICSLP'2000, Beijing, China, Oct. 16-20, 2000.